I find that .NET applications are often split into way too many assemblies. I am guilty of doing this myself, but lately I have been relying on namespace separation to a larger extent. Both approaches have pros and cons. Assemblies give you easy dependency cycle detection, on-demand loading and stricter encapsulation (the internal keyword). On the other hand, solutions with many projects compile considerably slower and make Visual Studio and plugins such as ReSharper less responsive.

For a more detailed list of pros and cons, Patrick Smacchia has a great article on controlling dependencies. My main objection to some multi-project solutions is that many of the assemblies are unnecessary: the added flexibility and dependency control is not needed and just adds complexity.

An example of a solution structure containing too many assemblies:

- App.Services

- App.Services.DataContracts

- App.Services.ServiceContracts

- App.Services.MessageContracts

- App.Services.TypeConverters

- App.Services.Validators

I recently reviewed a solution containing 31 projects, and the list above is similar to what I found there. About half of the 31 projects had only 2-3 types in them! I remember seeing the same thing in the Web Service Software Factory templates. What is the point of having data contracts, service contracts and message contracts in separate assemblies? Sure, this could be required in some special edge case, but unless you absolutely need it, I don't see the point.

Controlling dependencies

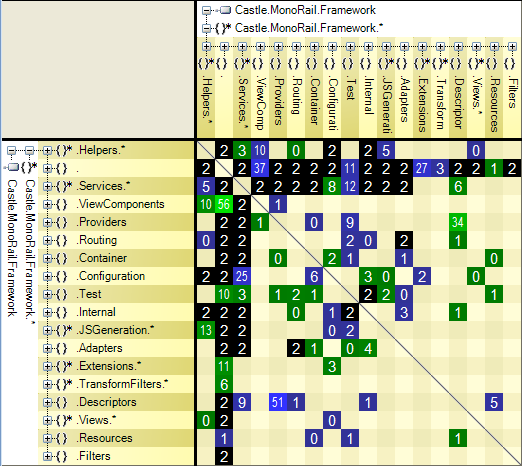

The standard build tools have built in dependency cycle checks for assemblies but not for namespaces. However NDepend can analyse dependencies within namespaces, here is a view of the namespace dependencies within Castle.MonoRail.Framework.

The dependency matrix generated by NDepend can be a little hard to interpret; at least I thought so at first. Each cell shows how many types are involved in the dependency between the two namespaces.

- A green cell means that there is a one-directional dependency from the horizontal to the vertical namespace

- A blue cell means the opposite, that is, a one-directional dependency from the vertical to the horizontal namespace

- A black cell means a bi-directional dependency
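To make the colour rules concrete, here is a small Python sketch (not NDepend code). It assumes a hypothetical map from each namespace to the namespaces it uses directly, ignores the type counts the real matrix shows, and colours a cell the way the list above describes.

```python
from itertools import combinations

# Hypothetical model: namespace -> namespaces whose types it uses directly.
DEPS = {
    "App.Web": {"App.Core"},
    "App.Core": {"App.Core.Impl"},
    "App.Core.Impl": {"App.Core"},  # implementations point back at their interfaces
    "App.Data": set(),
}


def uses(deps, a, b):
    """True if namespace a directly uses at least one type from namespace b."""
    return b in deps.get(a, set())


def cell_colour(deps, horizontal, vertical):
    """Colour a matrix cell following the green/blue/black rules above."""
    forward = uses(deps, horizontal, vertical)
    backward = uses(deps, vertical, horizontal)
    if forward and backward:
        return "black"  # bi-directional dependency
    if forward:
        return "green"  # horizontal -> vertical only
    if backward:
        return "blue"  # vertical -> horizontal only
    return None  # no dependency: empty cell


def bidirectional_pairs(deps):
    """Every pair of namespaces that would show up as a black cell."""
    names = sorted(set(deps) | {n for used in deps.values() for n in used})
    return [(a, b) for a, b in combinations(names, 2)
            if cell_colour(deps, a, b) == "black"]


print(bidirectional_pairs(DEPS))  # [('App.Core', 'App.Core.Impl')]
```

A real tool works on compiled types rather than a hand-written map, but the classification of each pair is the same idea.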

So, simple rule: black cell bad, green/blue good. Well, if only it were that simple. If you put concrete implementations of interfaces in the same namespace as the interfaces, your code can still be loosely coupled, yet you may end up with a bidirectional dependency between namespaces. The solution is to place interfaces and implementations in separate namespaces. I find this useful sometimes, but I don't think it should be a general rule.

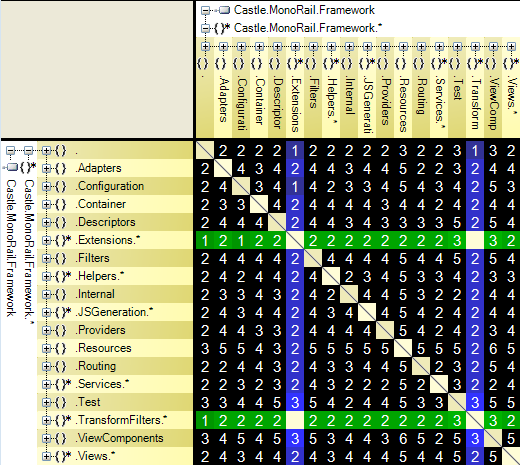

You can also view indirect dependencies in NDepend, which can highlight cyclic dependencies between namespaces:

Here we see that almost every namespace is part of a cyclic dependency. You can see which namespaces are involved by left-clicking on a cell.

I am not passing judgement on the MonoRail code. I have studied, and been inspired by, the source code of Castle Windsor, MicroKernel and MonoRail for years, and they contain some of the best written and designed .NET software I have seen.

The cyclic namespace dependencies in MonoRail are mostly caused by having interfaces and their implementations in the same namespace. For example, many concrete types in Castle.MonoRail.Framework reference types in deeper namespaces, which in turn reference abstract types defined in Castle.MonoRail.Framework.
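The cycle described above can be modelled as a small graph. The Python sketch below is only an illustration with hypothetical namespace names (not the actual MonoRail namespaces): a namespace takes part in a cycle if it can reach itself through its direct or indirect dependencies. The second model moves the abstractions into a hypothetical MyFramework.Abstractions namespace, which is the interface/implementation split mentioned earlier.

```python
def reachable(deps, start):
    """Namespaces reachable from start through direct or indirect dependencies."""
    seen = set()
    stack = list(deps.get(start, ()))
    while stack:
        ns = stack.pop()
        if ns not in seen:
            seen.add(ns)
            stack.extend(deps.get(ns, ()))
    return seen


def namespaces_in_cycles(deps):
    """A namespace is part of a cycle if it can reach itself."""
    return {ns for ns in deps if ns in reachable(deps, ns)}


# Hypothetical layout: abstractions live in the root namespace next to concrete types.
BEFORE = {
    "MyFramework": {"MyFramework.Views", "MyFramework.Helpers"},  # concrete types use deeper namespaces
    "MyFramework.Views": {"MyFramework"},  # ...which use abstractions defined in the root
    "MyFramework.Helpers": {"MyFramework.Views"},
}

# Same code after moving the abstractions into their own (hypothetical) namespace.
AFTER = {
    "MyFramework": {"MyFramework.Views", "MyFramework.Helpers", "MyFramework.Abstractions"},
    "MyFramework.Views": {"MyFramework.Abstractions"},
    "MyFramework.Helpers": {"MyFramework.Views"},
    "MyFramework.Abstractions": set(),
}

print(sorted(namespaces_in_cycles(BEFORE)))  # ['MyFramework', 'MyFramework.Helpers', 'MyFramework.Views']
print(sorted(namespaces_in_cycles(AFTER)))   # []
```

The price of breaking the cycle is an extra namespace that every implementation has to reference, which is why the split is worth it in some places but not as a blanket rule.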

NDepend CQL

NDepend has a very powerful SQL-like query language, CQL (Code Query Language), that can pinpoint design issues within your code. As Jim Bolla pointed out, you can also create CQL queries that check that your code is not breaking separation of concerns.

```
WARN IF Count > 0 IN SELECT METHODS WHERE IsUsing "System.Web" AND IsUsing "System.Data.SqlClient"
```

This query selects all methods that use types from both System.Web and System.Data.SqlClient; if it returns any method, you know something is wrong! You can also create queries that check for bidirectional namespace dependencies:

```
WARN IF Count > 0 IN SELECT NAMESPACES WHERE IsDirectlyUsing "MyNamespace" AND IsDirectlyUsedBy "MyNamespace"
```

These queries define constraints that you can then enforce in your build process. NDepend can be a little daunting at first because it extracts so much information that there is a risk of information overload, but it can be a really powerful tool for maintaining code quality and an aid for refactoring.
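As a rough illustration of what the two CQL queries check, and how a "WARN IF Count > 0" rule can gate a build, here is a Python sketch. It is not NDepend's API or its build integration: the code model (which methods and namespaces use what) is hypothetical and would in practice come from NDepend's own analysis.

```python
# Hypothetical code model: method -> namespaces whose types it uses.
METHOD_USAGE = {
    "OrderController.Save": {"System.Web", "System.Data.SqlClient"},  # UI mixed with data access
    "OrderRepository.Save": {"System.Data.SqlClient"},
    "OrderController.Index": {"System.Web"},
}

# Hypothetical namespace model: namespace -> namespaces it directly uses.
NAMESPACE_USAGE = {
    "MyNamespace": {"MyNamespace.Impl", "System"},
    "MyNamespace.Impl": {"MyNamespace"},
    "MyOther": {"MyNamespace"},
}


def mixed_concern_methods(usage):
    """Mirrors: SELECT METHODS WHERE IsUsing "System.Web" AND IsUsing "System.Data.SqlClient"."""
    return sorted(m for m, used in usage.items()
                  if {"System.Web", "System.Data.SqlClient"} <= used)


def bidirectional_with(usage, target):
    """Mirrors: SELECT NAMESPACES WHERE IsDirectlyUsing target AND IsDirectlyUsedBy target."""
    return sorted(ns for ns, used in usage.items()
                  if target in used and ns in usage.get(target, set()))


def run_rules():
    """Evaluate each rule and return how many of them warned (result count > 0)."""
    results = {
        "UI and data access in one method": mixed_concern_methods(METHOD_USAGE),
        "Bidirectional dependency with MyNamespace": bidirectional_with(NAMESPACE_USAGE, "MyNamespace"),
    }
    warnings = 0
    for rule, hits in results.items():
        if hits:  # WARN IF Count > 0
            warnings += 1
            print(f"WARNING: {rule}: {', '.join(hits)}")
    return warnings


def main():
    """Exit code for a build step: 1 if any rule warned, otherwise 0."""
    return 1 if run_rules() > 0 else 0
```

A build script could end with `sys.exit(main())` so that any warning fails the step; with the sample model above both rules fire.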