It is not uncommon when when writing tests that you want check that the correct items with correct data exists in a list. You can do this in many ways, most of which I have found to be problematic at times.

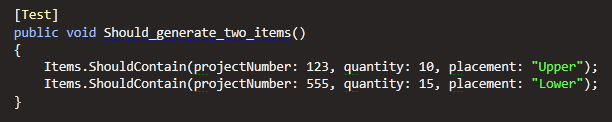

Example:

This approach forces the item order to be the one you expect. This can be overcome by sorting the list before. Another approach that I do not like, but have seen a lot:

This approach do not care about the order of the items, but will not generate instructive errors. When the test fails you get no hint of which property was wrong, was there any items in the list, was it the project number or the quantity that caused the mismatch?

I have been working on a reporting story that denormalized a big document into a single class with a large number of properties. This required a number of tests that checked the items and their generated properties. However I wanted a nice syntax and good instructive test errors that explain what property caused the mismatch and what alternative values that property was found with.

I set out to create this in a generic way to be reusable in other tests. And ended up with this:

Lets say there only exists an item with project number 123 and placement “Front”, and maybe 10 rows with other project numbers, then the fist line would throw a test fail like this:

The important point here is that not only do I get what property caused the mismatch but also the alternative values for that value given the project number filter of 123 (that is I do not get all values for the mismatched property).

This becomes useful when you have many properties and multiple rows you want to check. Because all filters that results in hits will be applied, and all that give no hits will not be used, so you can present an error that includes the mismatches, but also found alternative values for the mismatched properties. I am not sure if I am making any sense.

Maybe the filter logic will help, CollectionTestHelper:

I try to filter the list using the property function and value, if no hits are found I revert to the “unfiltered” list and add the property expression and value to a dictionary. I then revert to the “unfiltered” list, which is a bad term as it is the list with all matching filters applied.

The complete code for the CollectionTestHelper look at this gist: https://gist.github.com/1509663

In one case I made a helper method in the test class that has 14 optional parameters, so I can get this syntax:

Example:

Optional parameters are great for making tests and setups clearer.