The only part of the Oslo presentations at PDC that caught my attention was the MGrammar language (Mg).

The Mg language provides simple constructs for describing the shape of a textual language – that shape includes the input syntax as well as the structure and contents of the underlying information.

The interesting part of Mg is how it combines schema, data transformation, and functional programming concepts to define rules and lists. Creating and designing a language is hard and requires some knowledge of how parsers work. As Frans Bouma and Roger Alsing have pointed out, Mg and Oslo are not going to change that. I haven't worked professionally with language parsers. I have written a compiler for a C-like language using LEX and YACC, but that was many years ago. One of the most popular tools for language creation today is ANTLR; it would be great if someone knowledgeable in both ANTLR and Mg would write a comparison.

Anyway, I was intrigued by Mg so I decided to play around with it. I decided to create a simple DSL over the WatiN browser automation library. I wanted to be able to execute scripts that looked like this:

    test "Searching google for watin"
        goto "http://www.google.se"
        type "watin" into "q"
        click "btnG"
        assert that text "WatiN Home" exists
        assert that element "res" exists
    end

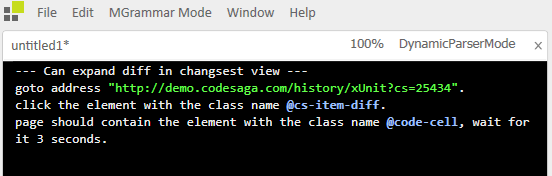

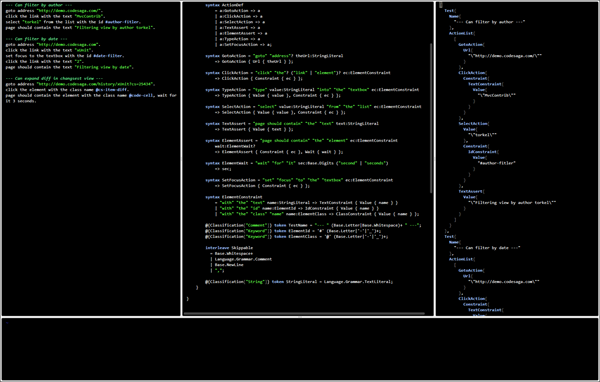

It may not be the best possible DSL for browser testing, and one could probably come up with something even more natural sounding, but it will be sufficient for now. To start creating the language specification I started Intellipad (an application that is included in the Oslo CTP). Getting the nice three-pane view, with input, grammar, and output windows, is kind of tricky. First switch the current mode to MGrammarMode: press Ctrl+Shift+D to bring up the minibuffer, then enter "SetMode('MGMode')". The MGrammar Mode menu should now be visible. From this menu select "Tree Preview", which brings up an open file dialog; in this dialog create an empty .mg file and select it.

I entered my goal DSL in the dynamic parser window and began defining the syntax and data schema. After an hour of trial and error I arrived at this grammar:

    module CodingInstinct {
        import Language;
        import Microsoft.Languages;
        export BrowserLang;

        language BrowserLang {
            syntax Main = t:Test* => t;

            syntax Test = TTest name:StringLiteral a:ActionList TEnd
                => Test { Name { name }, a };

            syntax ActionList
                = item:Action => ActionList[item]
                | list:ActionList item:Action => ActionList[valuesof(list), item];

            syntax Action
                = a:GotoAction => a
                | a:TypeAction => a
                | a:ClickAction => a
                | a:AssertAction => a;

            syntax GotoAction = TGoto theUrl:StringLiteral => GotoAction { Url { theUrl } };

            syntax TypeAction = TType text:StringLiteral TInto id:StringLiteral
                => TypeAction { Text { text }, ID { id } };

            syntax ClickAction = TClick id:StringLiteral => ClickAction { ID { id } };

            syntax AssertAction
                = TAssert TText text:StringLiteral TExists => AssertAction { TextExists { text } }
                | TAssert TElement element:StringLiteral TExists => AssertAction { ElementExists { element } };

            @{Classification["Keyword"]} token TTest = "test";
            @{Classification["Keyword"]} token TGoto = "goto";
            @{Classification["Keyword"]} token TEnd = "end";
            @{Classification["Keyword"]} token TType = "type";
            @{Classification["Keyword"]} token TInto = "into";
            @{Classification["Keyword"]} token TClick = "click";
            @{Classification["Keyword"]} token TAssert = "assert that";
            @{Classification["Keyword"]} token TExists = "exists";
            @{Classification["Keyword"]} token TText = "text";
            @{Classification["Keyword"]} token TElement = "element";

            interleave Skippable
                = Base.Whitespace+
                | Language.Grammar.Comment;

            syntax StringLiteral
                = val:Language.Grammar.TextLiteral => val;
        }
    }

I have no idea whether this is a reasonable grammar for my language or whether it can be written in a simpler or smarter way. The grammar generates an M node graph like the one below (its literal values do not match the script above exactly):

    [
        Test{
            Name{
                "\"Search google for watin\""
            },
            ActionList[
                GotoAction{
                    Url{
                        "\"http://www.google.se\""
                    }
                },
                TypeAction{
                    Text{
                        "\"asd\""
                    },
                    ID{
                        "\"google\""
                    }
                },
                ClickAction{
                    ID{
                        "\"btnG\""
                    }
                },
                AssertAction{
                    TextExists{
                        "\"text\""
                    }
                },
                AssertAction{
                    ElementExists{
                        "\"asd\""
                    }
                }
            ]
        }
    ]

The problem I had now was how to parse and execute this graph. I could not find any documentation on how to generate C# classes from an M schema. What is included in the CTP is a C# library for navigating the node graph that the language parser generates. This node graph is not very easy to work with: I wanted a GotoAction node to be mapped automatically to a GotoAction class, a TypeAction node to a TypeAction class, and so on. To accomplish this I wrote a simple M node graph deserializer.

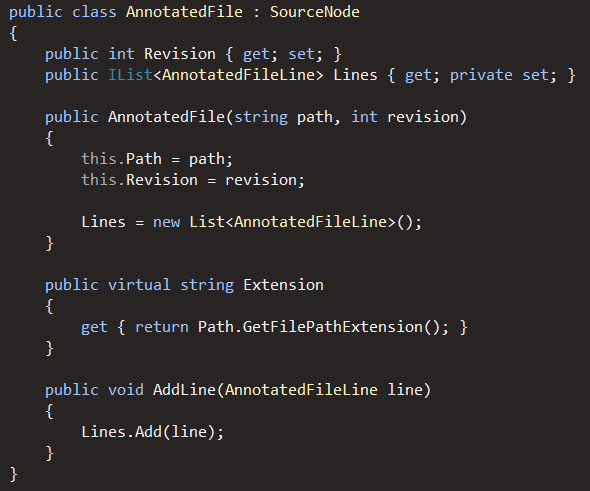

This is the AST I want the M node graph to deserialize to:

    public class Test
    {
        public string Name { get; set; }
        public IList<IAction> ActionList { get; private set; }

        public Test()
        {
            ActionList = new List<IAction>();
        }
    }

    public interface IAction
    {
        void Execute(IBrowser browser);
    }

    public class GotoAction : IAction
    {
        public string Url { get; set; }

        public void Execute(IBrowser browser)
        {
            browser.GoTo(Url);
        }
    }
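The other actions follow the same shape as GotoAction. Here is a minimal sketch of TypeAction and ClickAction. The property names (Text, ID) match the labels the grammar emits, but the IBrowser members TypeText and Click, and the RecordingBrowser stand-in, are assumptions made up for illustration; the real interface in the download may look different. IAction is repeated only so that the sketch compiles on its own.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical browser abstraction; only GoTo appears in the post itself.
public interface IBrowser
{
    void GoTo(string url);
    void TypeText(string elementId, string text);
    void Click(string elementId);
}

// Repeated from the AST above so this sketch is self-contained.
public interface IAction
{
    void Execute(IBrowser browser);
}

public class TypeAction : IAction
{
    public string Text { get; set; }
    public string ID { get; set; }

    public void Execute(IBrowser browser)
    {
        browser.TypeText(ID, Text);
    }
}

public class ClickAction : IAction
{
    public string ID { get; set; }

    public void Execute(IBrowser browser)
    {
        browser.Click(ID);
    }
}

// Stand-in that records calls instead of driving a real browser.
public class RecordingBrowser : IBrowser
{
    public readonly List<string> Log = new List<string>();

    public void GoTo(string url) { Log.Add("goto " + url); }
    public void TypeText(string elementId, string text) { Log.Add("type " + text + " into " + elementId); }
    public void Click(string elementId) { Log.Add("click " + elementId); }
}
```

Because each action only talks to the interface, a recording stand-in like this makes it possible to check what a script would do without opening Internet Explorer.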

It was quite tricky to write a generic deserializer, mostly because the M node object graph is kind of weird (Nodes, Sequences, Labels, Values, EntityMemberLabels, etc). Here is the code:

    public class MAstDeserializer
    {
        private GraphBuilder builder;

        public MAstDeserializer()
        {
            this.builder = new GraphBuilder();
        }

        public object Deserialze(object node)
        {
            if (builder.IsSequence(node))
            {
                return DeserialzeSeq(node).ToList();
            }

            if (builder.IsNode(node))
            {
                return DeserialzeNode(node);
            }

            return null;
        }

        private object DeserialzeNode(object node)
        {
            var name = builder.GetLabel(node) as Identifier;

            foreach (var child in builder.GetSuccessors(node))
            {
                if (child is string)
                {
                    return UnQuote((string)child);
                }
            }

            var obj = Activator.CreateInstance(Assembly.GetExecutingAssembly().FullName, "WatinDsl.Ast." + name.Text).Unwrap();
            InitilizeObject(obj, node);
            return obj;
        }

        private void InitilizeObject(object obj, object node)
        {
            foreach (var child in builder.GetSuccessors(node))
            {
                if (builder.IsSequence(child))
                {
                    foreach (var element in builder.GetSequenceElements(child))
                    {
                        AddToList(obj, child, element);
                    }
                }
                else if (builder.IsNode(child))
                {
                    obj.SetPropery(builder.GetLabel(child).ToString(), DeserialzeNode(child));
                }
            }
        }

        private void AddToList(object obj, object parentNode, object element)
        {
            var propertyInfo = obj.GetType().GetProperty(builder.GetLabel(parentNode).ToString());
            var value = propertyInfo.GetValue(obj, null);
            var method = value.GetType().GetMethod("Add");
            method.Invoke(value, new[] { DeserialzeNode(element) });
        }

        private IEnumerable<object> DeserialzeSeq(object node)
        {
            foreach (var element in builder.GetSequenceElements(node))
            {
                var obj = DeserialzeNode(element);
                yield return obj;
            }
        }

        private object UnQuote(string str)
        {
            return str.Substring(1, str.Length - 2);
        }
    }
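The deserializer calls obj.SetPropery(...), an extension method that is not listed in this post. Below is a rough sketch of what such a helper could look like, assuming it simply sets a public instance property by name through reflection. The class name ObjectExtensions and the SampleAction type are made up for the example, and the version in the download may differ.

```csharp
using System;
using System.Reflection;

public static class ObjectExtensions
{
    // Sets a public instance property by name; the method name keeps the
    // spelling used by the deserializer above.
    public static void SetPropery(this object obj, string propertyName, object value)
    {
        if (obj == null)
        {
            throw new ArgumentNullException("obj");
        }

        PropertyInfo property = obj.GetType().GetProperty(
            propertyName, BindingFlags.Public | BindingFlags.Instance);

        if (property == null)
        {
            throw new InvalidOperationException(
                "Type " + obj.GetType().Name + " has no public property named " + propertyName);
        }

        property.SetValue(obj, value, null);
    }
}

// Hypothetical stand-in for an AST class, used only to demonstrate the helper.
public class SampleAction
{
    public string Url { get; set; }
}
```

With this in place, `new SampleAction().SetPropery("Url", "http://www.google.se")` assigns the Url property, while a label that has no matching property fails with a descriptive exception instead of a NullReferenceException.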

I guess future Oslo previews will include something like the deserializer above, as it is essential for creating executable DSLs. Maybe the Oslo team has another option for doing this, for example generating XAML from the node graph, which can then initialise your AST.

So how do we compile and run code in our new WatiN DSL? First we need to compile the grammar .mg file into a .mgx file, which is done with the MGrammarCompiler. We can then use the .mgx file to create a parser. The parser generates a node graph, which we deserialize into our custom AST.

    public class WatinDslParser
    {
        public object Parse(string code)
        {
            return Parse(new StringReader(code));
        }

        public object Parse(TextReader reader)
        {
            var compiler = new MGrammarCompiler();
            compiler.FileNames = new[] { "BrowserLang.mg" };
            compiler.Target = Target.Mgx;
            compiler.References = new string[] { "Languages", "Microsoft.Languages" };
            compiler.Execute(ErrorReporter.Standard);

            var parser = MGrammarCompiler.LoadParserFromMgx("BrowserLang.mgx", "CodingInstinct.BrowserLang");
            object root = parser.ParseObject(reader, ErrorReporter.Standard);
            return root;
        }
    }

The reason I compile the grammar from code every time I run a script is so I can easily change the grammar and rerun without going through a separate compiler step. The Parse function above returns the M node graph. Everything is glued together in the WatinDslRunner class:

    public class WatinDslRunner
    {
        public static void RunFile(string filename)
        {
            var parser = new WatinDslParser();
            var deserializer = new MAstDeserializer();

            using (var reader = new StreamReader(filename, Encoding.UTF8))
            {
                var rootNode = parser.Parse(reader);
                var tests = (IEnumerable)deserializer.Deserialze(rootNode);

                foreach (Test test in tests)
                {
                    RunTest(test);
                }
            }
        }

        public static void RunTest(Test test)
        {
            Console.WriteLine("Running test " + test.Name);

            using (var browser = BrowserFactory.Create(BrowserType.InternetExplorer))
            {
                foreach (var action in test.ActionList)
                {
                    action.Execute(browser);
                }
            }
        }
    }

If you have problems with the code above, please remember that the code in this post is just an experimental spike to learn MGrammar and the M Framework library. If you want to experiment with this yourself, download the code+solution: WatinDsl.zip.

Summary and some other thoughts on Oslo/Quadrant

It was quite a bit of work going from my textual DSL to something executable. The majority of the time was spent figuring out the M node graph and how to parse and deserialize it; writing the grammar was very simple. MGrammar will definitely make it easier to create simple data definition languages that could replace some existing XML-based solutions, but I doubt that it will be widely used in enterprise apps for creating executable languages. Maybe it is more suited to tool and framework providers. It is the first public release, so a lot will probably change and improve, and it is too early to say how much of an impact M/Oslo will have on .NET developers.

I got home from PDC quite puzzled over Oslo and the whole model-driven development thing. They only talked about data, data, data. I don't think they mentioned the word BEHAVIOR even once during any Oslo talk that I attended, and to me that is kind of important :) I asked others about this, and most agreed that they did not understand the point of Oslo or how it would significantly improve or change application development.

Sure, I found Quadrant to be a cool application that could potentially replace some Excel/Access solutions, but what else? In what way is Quadrant interesting for application developers? It would be interesting to get some comments on what others think about MGrammar, Quadrant, and model-driven development :)