I thought I would do a "best of" post, as many others seem to be doing.

Most viewed posts (in order):

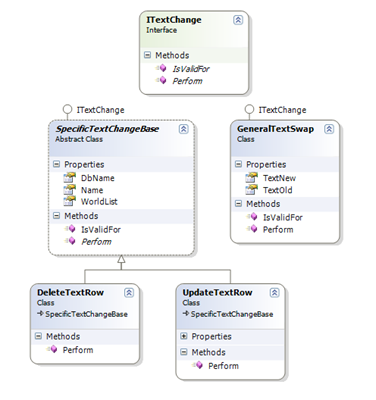

- IoC Container Benchmark - Unity, Windsor, StructureMap and Spring.NET

- NHibernate 2.0 Events and Listeners

- JQuery and Separation of Concerns

- Cleanup your html with JQuery

- Breadcrumb menu using JQuery and ASP.NET MVC

- Url Routing Fluent Interface

- Creating a WatiN DSL using MGrammar

- View Model Inheritance

- NHibernate 2.0 Statistics and a MonoRail filter

In retrospect, I regret that I did not start sooner. During 2005-2007 I was a lead developer on a multi-tenant B2B system where I did some interesting work with URL rewriting in WebForms (before there was much information about it), started using NHibernate and Castle Windsor, implemented SAP integration logging using AOP, and more. In short, I had a lot to blog about that could have been valuable and helpful to the .NET community.

I am very glad that I eventually started blogging, because it has been a fun, rewarding and educational experience. One of the reasons I finally started was the extremely easy setup that Google's Blogger service provided: you could register a domain and start blogging in a matter of minutes. Blogger has been a great way to get started; the only problems are the poor comment system and some lack of flexibility. During 2009 I will probably move to a hosted solution where I can run and configure the blogging software myself, but right now it is not a top priority.

I want to thank everyone who subscribes to or reads this blog. I have been very pleasantly surprised by the number of subscribers. It is very motivating to see that people find value in what I write, and it keeps me wanting to write more and better.

Merry Christmas & Happy New Year!