If you have worked with DTS or SSIS packages you probably know that they can quickly become painful to work with, especially concerning versioning, logging and above all deployment.

I recently tried Ayende´s open source ETL framework RhinoETL, it tackles the ETL problem from a completely different angle compared to DTS / SSIS. At it's heart RhinoETL is a very simple .NET framework to handle an ETL process, the key components are processes, pipelines and operations.

The process that I needed was a very simple one, namely to update a text table stored in multiple databases. The update could be of different types, for example swap every occurrence of a text translation to another and delete or update a specific row. In previously releases this was handled by writing manual update scripts. The release that I am working on currently however requires extensive changes to the texts in these tables spread over many databases and writing repetitive SQL scripts was not something that I felt doing. It felt like a good opportunity to try RhinoETL.

I began writing this input operation:

public class ReadWordList : InputCommandOperation { public ReadWordList(string connectionStringName) : base(connectionStringName) { } protected override Row CreateRowFromReader(IDataReader reader) { return Row.FromReader(reader); } protected override void PrepareCommand(IDbCommand cmd) { cmd.CommandText = "SELECT * FROM Wordlists"; } }

This is the first operation, its responsibility is to fill the pipeline with rows from the Worldlists table. The next operation is the one updating the rows. It takes as input a list of ITextChange instances that is the object that performs the change.

public class TextChangeOperation : AbstractOperation { private IList<ITextChange> changes; public TextChangeOperation(IList<ITextChange> changes) { this.changes = changes; } public override IEnumerable<Row> Execute(IEnumerable<Row> rows) { foreach (var row in rows) { foreach (var change in changes) { if (change.IsValidFor(row)) change.Perform(row); } yield return row; } } }

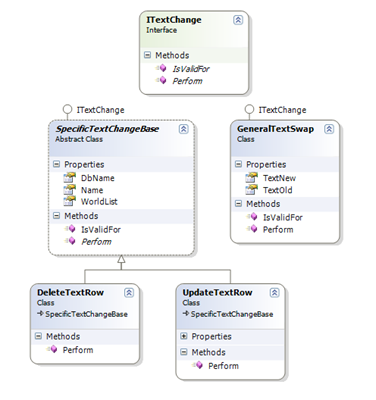

There is a class hierarchy representing the different types of text changes:

The code for the GeneralTextSwap class is very simple:

public class GeneralTextSwap : ITextChange { public string TextOld { get; set; } public string TextNew { get; set; } public bool IsValidFor(Row row) { var text = ((string) row["Text"]).Trim(); return text == TextOld; } public void Perform(Row row) { row["TextOld"] = row["Text"]; row["Text"] = TextNew; } }

The point of storing the old text value is that I have an operation after the TextChangeOperation that logs all changes to a csv file. The changes for a specific release is then just defined as static list on a static class. For example:

public class ReleaseChanges_For_Jan09 { public static IList<ITextChange> List; static ReleaseChanges_For_Jan09() { List = new List<ITextChange>() { new GeneralTextSwap() { TextOld = "some old link value", TextNew = "some new link value" }, new UpdateTextRow() { DbName = "DN_NAME", WorldList = "USER_PAGE", Name = "CROSS_APP_LINK", TextNew = "New link value" }, new DeleteTextRow() { DbName = "DN_NAME_2", WorldList = "SOME_PAGE", Name = "SOME_LINK" } } } }

The above is just an example in reality I have hundreds of general and specific changes, and yes this is legacy system which handles text and links very strangely. The above code could easily be handled by a simple sql script with about the same number of lines of TSQL code, the benefit of placing this inside an ETL written in C# is that I can easily reuse the change logic over multiple databases and I also get great logging and tracing of all changes. The point of this post is to show how easy it can be to use OOP to model and abstract an ETL process using RhinoETL. Even though this ETL process i very simple it serves as good example for showing how a TSQL script, DTS or SSIS packages can be rewritten and simplified using the power of an object oriented language.